What Is Edge Computing and Why Is It Important for IoT Companies?

“Edge” designates the boundary of a technical information network, where the virtual and the real world meet. In a decentralized IT architecture, the data generated are not processed in the data center but directly at this transition point, and are transferred to the cloud only if necessary. Here, edge computing enables data pre-processing in real time: the collected data are condensed locally according to defined criteria. Initial analysis results can then be fed back directly to the end devices or processed further. Afterwards, only relevant and therefore smaller data packages, which are not usable on their own, need to be transferred to the cloud.

The reduction in data volume relieves stationary servers and reduces the running costs for data transfer and the cloud. This decentralized processing not only saves resources but also minimizes the risk of data loss outside the plant or in the event of cyber attacks on the cloud. Edge computing makes it possible to shorten latency, optimize data streams, and improve production flows and processes.
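
The condensing step described above can be illustrated with a minimal Python sketch. It assumes a window of numeric sensor readings and a single threshold as the relevance criterion; the names `Summary`, `condense` and `select_for_cloud`, the sample values and the 25.0 limit are hypothetical and do not come from any product mentioned in this article.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass
class Summary:
    """Compact result of pre-processing one window of raw readings."""
    count: int
    minimum: float
    maximum: float
    average: float


def condense(readings: Sequence[float]) -> Summary:
    """Reduce a window of raw readings to a few summary values."""
    return Summary(len(readings), min(readings), max(readings), mean(readings))


def select_for_cloud(summary: Summary, limit: float) -> Optional[Summary]:
    """Return the summary only if it meets the assumed relevance criterion."""
    return summary if summary.maximum > limit else None


# Hypothetical temperature samples collected at the edge
window = [20.1, 20.3, 20.2, 27.9, 20.4]
summary = condense(window)

# Only a window containing a value above the limit is passed on to the cloud
upload = select_for_cloud(summary, limit=25.0)
```

In this sketch, five raw values shrink to one small record, and a window without any value above the limit would produce no upload at all.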

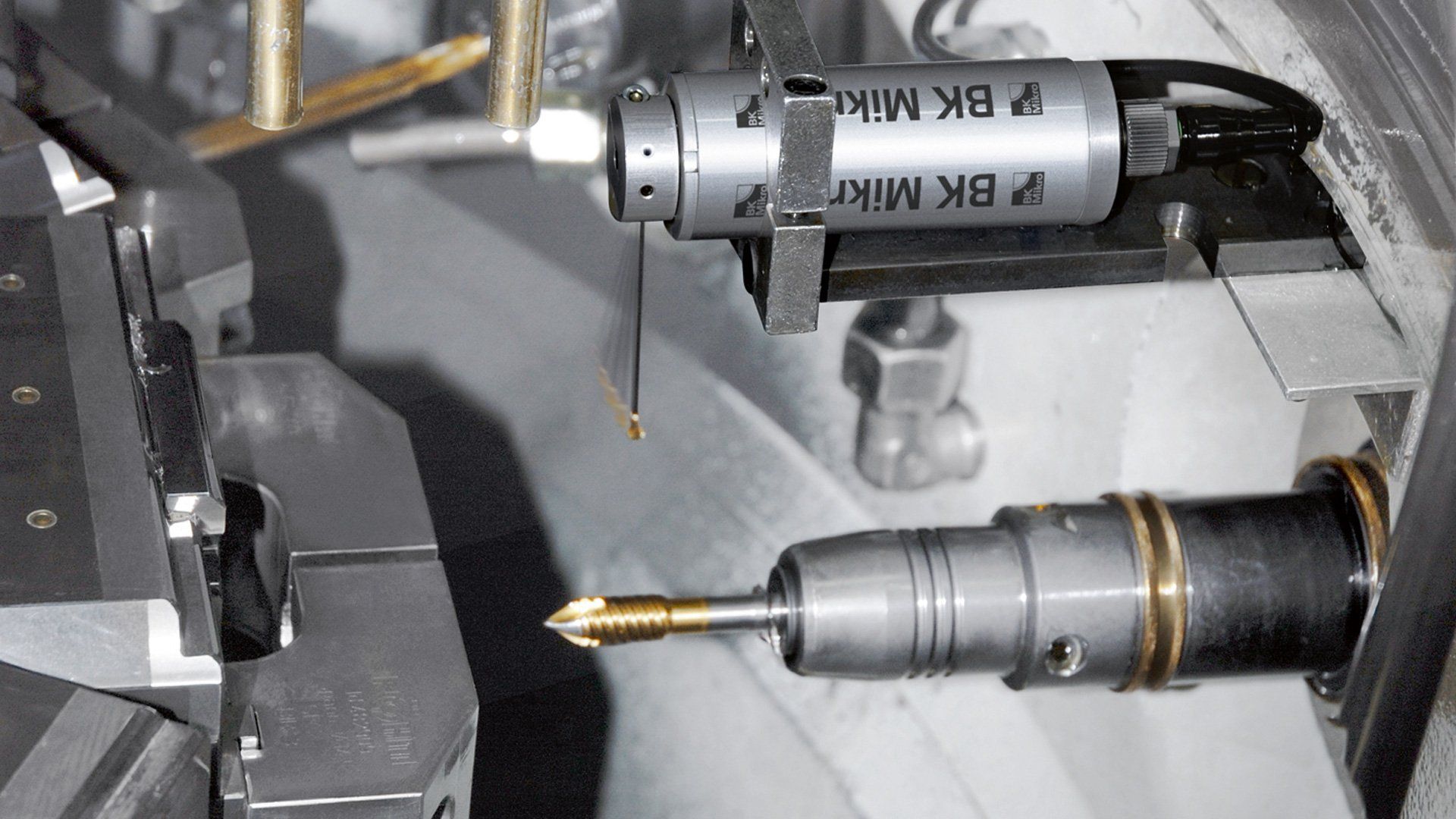

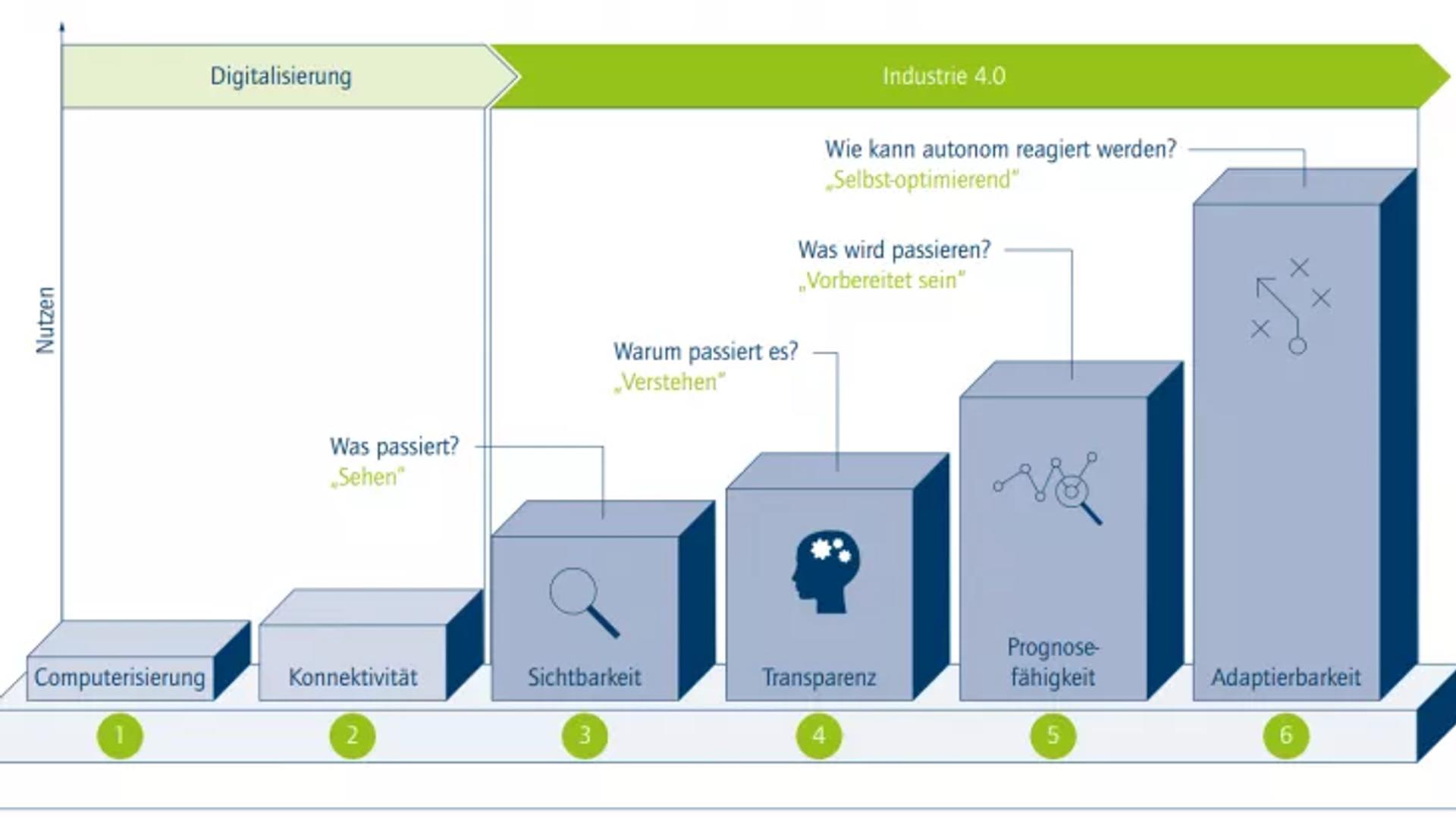

Pre-processing belongs to the third level of the Industrie 4.0 maturity model [2]: sensors record a multitude of data points and thereby map processes from start to finish. In the next step, the data that were analyzed in pre-processing according to initial relevance criteria become visible. On the fourth and fifth levels of the model, the findings from this data evaluation are fed back into the processes automatically and applied there to derive causal relationships and strengthen forecasting capability (e.g. predictive maintenance). Mobile work machines (AGV / AGC), for example, use edge computing for local data analysis and send only change data to the cloud in real time. From there, they receive further tasks or updates for their navigation data.
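
One way to picture "sending only change data" is a simple deadband filter: a value is reported only when it differs from the last reported value by more than a tolerance. The sketch below is an illustration under that assumption, not a description of how any particular AGV works; the class `ChangeReporter`, the field names and the tolerance are hypothetical.

```python
from typing import Dict


class ChangeReporter:
    """Reports only values that moved by more than `tolerance` since last sent."""

    def __init__(self, tolerance: float) -> None:
        self.tolerance = tolerance
        self._last_sent: Dict[str, float] = {}

    def changes(self, state: Dict[str, float]) -> Dict[str, float]:
        """Return the subset of `state` that is worth transmitting."""
        delta: Dict[str, float] = {}
        for key, value in state.items():
            previous = self._last_sent.get(key)
            # Send a value the first time it is seen or when it has changed enough
            if previous is None or abs(value - previous) > self.tolerance:
                delta[key] = value
                self._last_sent[key] = value
        return delta


reporter = ChangeReporter(tolerance=0.05)

# The first call transmits the full (hypothetical) vehicle state
first = reporter.changes({"x": 1.0, "y": 2.0, "battery": 0.9})

# The second call transmits only the field that changed noticeably
second = reporter.changes({"x": 1.02, "y": 2.5, "battery": 0.9})
```

Here the second message carries only the `y` value, because `x` moved less than the tolerance and `battery` did not change.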


A Compact Connectivity Box PC for Lean Solutions

The Prime Box Pico is one of the smallest industrial box PCs and stands out for its high connectivity and particularly compact design.

Within the modular system of the Prime Cube product brand, modular edge computing and software concepts are implemented, among others for the innovative Prime Box Pico. Virtualization tools such as a real-time hypervisor make it possible to integrate existing remote maintenance solutions and run Docker-based applications in parallel.

The Prime Box Pico runs Windows 10 IoT Enterprise or Linux; on request, it can be delivered with an individually preconfigured operating system.

In addition to WLAN, LTE and other radio extensions, an optional CAN bus extension is available. CAN is a robust, fast and simple fieldbus that is increasingly used for small, cost-sensitive applications in mechanical engineering.

Edge Gateway: Efficiency, Low Effort and High Data Security

Within the IIoT framework, OT (operational technology) and IT (information technology) need to be increasingly interconnected.

This is where the edge gateway comes in. Control, data processing and the interface to the cloud level can be integrated into a single device.

The resulting edge gateway bundles five major functions. Data extraction from the machine, control/HMI and data pre-processing constitute the actual edge computing. The powerful edge gateway “GS.GATE” additionally handles the cloud connection and remote access down to the sensor level.

Consolidating these functions into one device saves costs, reduces maintenance effort and resource use, and frees up space in the control cabinet.

Users have a single point of contact for control, cyber security and data processing. Thanks to the edge computing solution, data processing is independent of the machine's actual process task, which means that the machine continues to run even if the cloud or the Internet connection fails. In that case, the required data are buffered and stored locally until they can be transferred to the cloud again.
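
The local buffering during a connection failure resembles a store-and-forward queue. The following Python sketch shows the general pattern only; it is not the GS.GATE implementation. The class `StoreAndForward`, the `fake_uplink` function and the capacity are hypothetical, and the buffer here lives in memory, whereas a real gateway would presumably persist it.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Keeps records locally until the uplink accepts them, oldest first."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000) -> None:
        self._send = send
        # Assumption: when the buffer is full, the oldest record is dropped
        self._buffer: Deque[dict] = deque(maxlen=capacity)

    def publish(self, record: dict) -> None:
        """Queue a record and try to deliver everything that is pending."""
        self._buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Send pending records in order; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)


# Simulated cloud connection that can be switched on and off
link = {"online": False}
delivered: List[dict] = []


def fake_uplink(record: dict) -> bool:
    if link["online"]:
        delivered.append(record)
    return link["online"]


gateway = StoreAndForward(fake_uplink)
gateway.publish({"seq": 1})  # connection is down, record stays local
gateway.publish({"seq": 2})
pending_while_offline = gateway.pending

link["online"] = True        # connection is back
flushed = gateway.flush()    # buffered records are delivered in order
```

The machine-side code only calls `publish`; whether the cloud is reachable at that moment does not affect it, which mirrors the independence described above.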

Furthermore, pre-processing ensures that raw data remain at their source. Sensitive or critical data are better protected, and the risk of data misuse is reduced.

Data analysis close to the process also simplifies predictive maintenance and quality assurance. Besides meeting real-time requirements, this has an immediate positive effect on productivity.

“Hardware Follows Software” Determines the Future

In the future, the success of IIoT solutions will depend strongly on suitable software components, with hardware and mechanics serving as generic elements of the overall concept. Scalable platforms combine both and allow individual requirements to be implemented.

Whereas connectivity used to be very expensive and cumbersome, edge computing now makes connecting sensors from the OT level to the cloud cost-efficient and easy to implement. Because of the reduced data volume, the connection can be established with simple yet robust technology.

For example, the Narrowband IoT (NB-IoT) network standard, based on LTE and 5G, is designed for low bandwidth. Its simple radio modules provide only the necessary functions, making the technology particularly cost-efficient, robust and resource-saving.

It allows a direct connection to the cloud without the sometimes cumbersome integration into the local IT infrastructure.
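
On a low-bandwidth link, the size of each message matters. As a rough illustration, the Python sketch below packs one pre-processed reading into a fixed binary frame and compares it with the same content as JSON. The frame layout, the field names and the values are assumptions made for this example; they are not part of any narrowband standard or product.

```python
import json
import struct
from typing import Tuple

# Hypothetical layout, little-endian without padding:
# uint32 timestamp, int16 temperature in 0.01 °C, uint16 status code
FRAME = struct.Struct("<IhH")


def encode(timestamp: int, temperature_c: float, status: int) -> bytes:
    """Pack one reading into a fixed-size binary frame."""
    return FRAME.pack(timestamp, round(temperature_c * 100), status)


def decode(payload: bytes) -> Tuple[int, float, int]:
    """Unpack a frame produced by `encode`."""
    timestamp, centi_degrees, status = FRAME.unpack(payload)
    return timestamp, centi_degrees / 100, status


frame = encode(1_700_000_000, 27.9, 1)

# The same reading as a JSON document, for size comparison
as_json = json.dumps({"ts": 1_700_000_000, "temp": 27.9, "status": 1}).encode()

print(len(frame), len(as_json))
```

The binary frame is several times smaller than the JSON text. The price is a fixed schema that both the edge device and the cloud side have to agree on.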

The Right Partner Gives Small and Medium-Sized Companies Confidence in Developing the Optimum Edge Computing Solution

The particularly large volume of data generated in the IIoT entails a high degree of complexity. Companies have to define very clearly what happens to the data and how to use them properly, e.g. with respect to predictive maintenance and production processes.

While large companies are usually very well equipped, small and medium-sized companies in the industrial sector and in automation technology are often uncertain about IIoT and edge computing, usually because they lack knowledge or resources. These companies depend on competent partners who accompany and advise them throughout the development of the complete system architecture.

The development experts at Schubert System Elektronik advise their customers on everything from sensor systems to the control level, including the definition of powerful hardware and software platforms, the selection of operating systems or virtualization and hypervisor solutions, and the optimum connection to the cloud, taking into account all security aspects relevant to the respective automation architecture.

Authors from Schubert System Elektronik GmbH:

- Frank Wemken, Head of Development

- Alexander Matt, Product Manager, Prime Cube

- Marcus Finkbeiner, Head of Sales, Prime Cube

Sources:

[1] https://www.i40-magazin.de/allgemein/technik/cloud-internet-of-things/splunk-studie-zum-datenzeitalter/ ; https://www.splunk.com/

[2] Acatech, Industrie 4.0 Maturity Index Update 2020

[3] Figure 1: Schuh, G./Anderl, R./Dumitrescu, R./Krüger, A./ten Hompel, M. (eds.): Industrie 4.0 Maturity Index. Die digitale Transformation von Unternehmen gestalten – UPDATE 2020 – (acatech STUDIE), München 2020.